Hi all,

I started exploring ITFlow today. What an awesome tool! Big thanks to everyone involved in building it. To give back to the community, I wanted to share a quick guide on connecting a local Ollama instance to ITFlow’s AI features. I didn’t spot this in the docs or forums, so hopefully it helps someone (and maybe gets added to the official documentation?).

Here’s how I set it up:

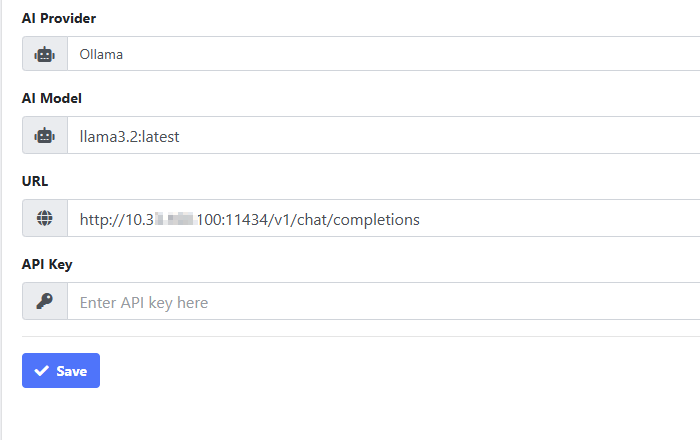

AI Provider: Ollama

AI Model: Depends on which models you’ve pulled. Run `ollama list` in a terminal on the Ollama host to see what’s available (e.g., `llama3.1`).

URL: `http://X.X.X.X:11434/v1/chat/completions` (replace `X.X.X.X` with the IP of your Ollama server, or use `localhost` if Ollama runs on the same machine as ITFlow; 11434 is Ollama’s default port).

API Key: Leave it blank. A local Ollama instance doesn’t require one.
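Before saving, it can help to confirm from the ITFlow server that the endpoint actually answers. Here’s a minimal Python sketch (standard library only) that sends one request in the OpenAI chat-completions format to the same URL with no API key, plus a helper that lists installed models via Ollama’s native `/api/tags` endpoint (the same list `ollama list` shows). The host, model name, and helper names (`chat_completions_url`, `build_request`, `ask`, `model_names`, `list_models`) are my own placeholders, not anything from ITFlow, so adjust them to your setup.

```python
import json
import urllib.request

# Placeholders (assumptions): set to your Ollama server's IP, or "localhost"
# if Ollama runs on the same machine as ITFlow.
OLLAMA_HOST = "localhost"
OLLAMA_PORT = 11434  # Ollama's default port
MODEL = "llama3.1"   # should match a name shown by `ollama list`


def chat_completions_url(host, port=OLLAMA_PORT):
    """Build the OpenAI-compatible URL that goes into ITFlow's URL field."""
    return f"http://{host}:{port}/v1/chat/completions"


def build_request(host, model, prompt):
    """Build a POST request in the OpenAI chat-completions format.

    No Authorization header is set, mirroring the blank API Key field.
    """
    body = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "stream": False,  # ask for one complete JSON response
    }
    return urllib.request.Request(
        chat_completions_url(host),
        data=json.dumps(body).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(host, model, prompt, timeout=120.0):
    """Send the request and return the assistant's reply text."""
    with urllib.request.urlopen(build_request(host, model, prompt), timeout=timeout) as resp:
        data = json.load(resp)
    return data["choices"][0]["message"]["content"]


def model_names(tags):
    """Extract model names from an /api/tags response."""
    return [m["name"] for m in tags.get("models", [])]


def list_models(host, port=OLLAMA_PORT, timeout=10.0):
    """Fetch the installed models from Ollama's native /api/tags endpoint."""
    with urllib.request.urlopen(f"http://{host}:{port}/api/tags", timeout=timeout) as resp:
        return model_names(json.load(resp))
```

With Ollama running, `print(list_models(OLLAMA_HOST))` should print your installed models, and `print(ask(OLLAMA_HOST, MODEL, "Say hello"))` should print a short reply. If this works on the Ollama host but the connection is refused from the ITFlow machine, Ollama is probably listening only on 127.0.0.1 (its default); setting the `OLLAMA_HOST=0.0.0.0` environment variable for the Ollama service should make it reachable over the network.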

The screenshot above shows how this looks in ITFlow’s settings. I haven’t tested it with ticket response generation yet, but it looks like a nice free alternative to hosted AI providers.

Would love feedback or tips if anyone’s done this too! Also, if a dev or doc contributor sees this, maybe we could add an Ollama section to the AI integration page?

Thanks again for this fantastic tool!